Description

This project uses a character-level LSTM to generate text one character at a time, predicting each new character from the characters that precede it in a text file.

Rick: why don't you ask the

Smartest people in the back

of my mind, too.

The did is a bunch of

quickly as parents.

They have been blowin' me up.

One last stop.

Where's the helicopter.

And that's the wa-a-a-ay

that will die.

Screw the inevitable.

That's it, Morty!

He is gone.

Jerry, stop, please, e.

I just wish I got to see more of you, Beth.

I did it to protect the neighborhood.

Take that thing down.

Your grandson

is a shit about your future,

you getting the ruler of all the

reason anyone surviver.

Half of all time?

Damn it!

- Jerry!

- Dad!

- Rick!

- Oh, is that this happening?!

>> rick: Oh, shit, dawg.

Morty!!

>> we got it.

The call's coming from...

♪ inside a kid

all day and, now, I can feel my work.

- Yeah, no.

No, you guys are talking about

and grab a medi-pack.

Listen, Jerry, don't care about earlier.

Yeah, yeah, yeah.

I heard this stalker and star and asking.

Rick, if you want

to prove you'll take it over

mumford & sons.

>> rick: Hey, save your adventures.

Hey, are you tired of real door.

Man, I got pubes,

Commander-in-Queef.

You want to come back.

Change your mind.

I mean, he's a morty.

www.tvsubtitles.net

In local news,

can you take my temperature

because I think I'm pretty jerking

> rick: The ricks are probably

[ laughs ]

clever as you think you a

Sample output from a char-RNN trained for 400 epochs on Rick and Morty subtitles.

I first started hearing the term 'machine learning' during my first year of college. Organisations like Google and Facebook seemed to be applying it almost everywhere, so I assumed it was some kind of magic that only top-notch programmers could use. Little did I know that two years later I would be applying these algorithms myself.

Char-RNN was one of the first projects I worked on that wasn't just me reading theory and someone else's code. It was my starting point for coding projects independently, without copy-pasting the code and gloating when it worked (which it obviously would :P ). It was also one of the longest projects I worked on, partly because I didn't understand TensorFlow that well. The inspiration came from this absolutely awesome gist from Andrej Karpathy, which sparked a genuine interest in deep learning and moved me from idolizing Andrew Ng to following a wider community of researchers and engineers building different and amazing stuff.

Looked at from a very low-resolution perspective, an RNN seems too good to be true. When dealing with language, context matters, so unlike a traditional neural network (ANN), which treats its inputs as independent variables, we want to keep the previous inputs in the sequence in memory. Below I try to explain how the char-RNN works and introduce an improvement, the LSTM. For a better explanation and a deeper understanding, read this post about RNN and LSTM.

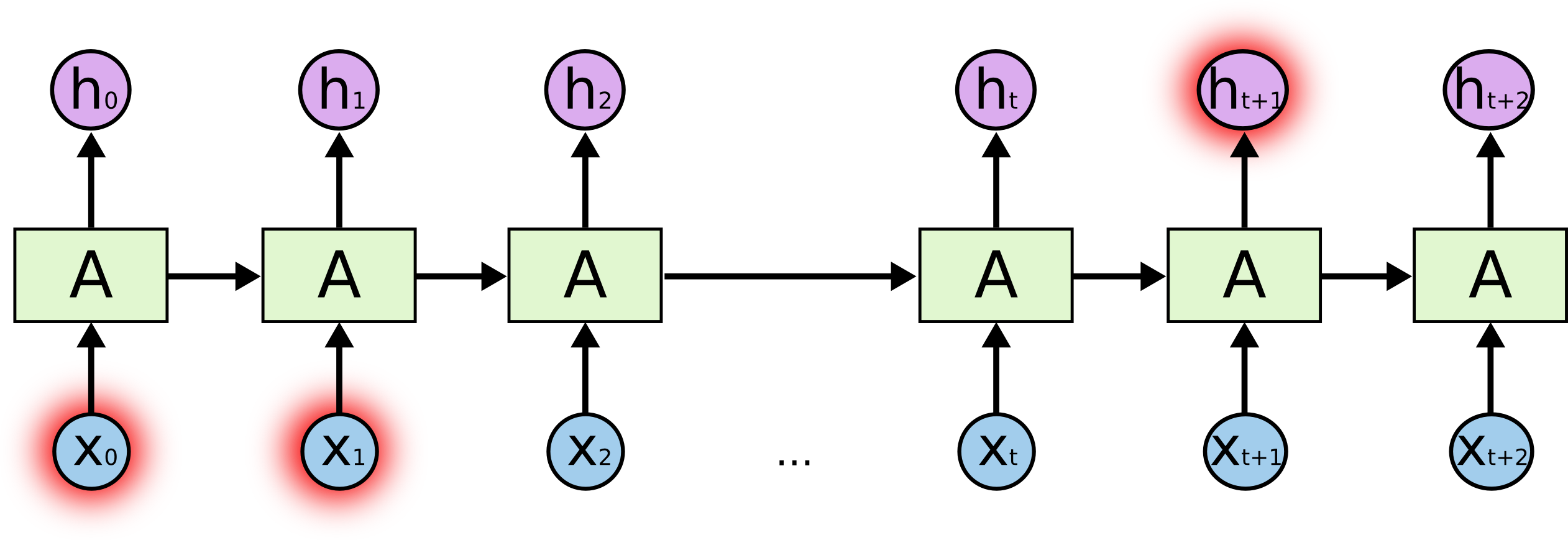

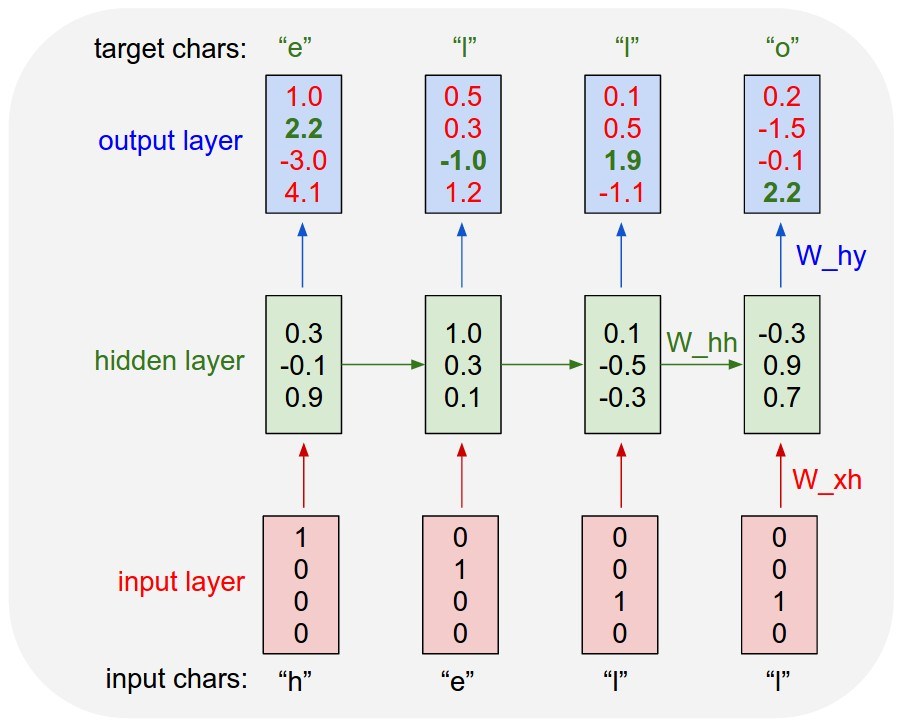

One of the main reasons recurrent nets exist is that other architectures, like ANNs or CNNs, are too constrained in their inputs. An RNN instead lets us use sequences of inputs, which show up in many real-world cases, be it natural language, image captioning or stock prices, where the current value depends on previous values. An RNN executes as if it were unrolled over time steps. This is evident in the diagram, where the hidden state from the first time step is fed into the second time step, and so on. Another important thing to remember is that every time step within a single layer uses the same weights.
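The unrolling and weight sharing described above can be sketched in NumPy. This is a minimal illustration of a vanilla RNN forward pass, not the code used in this project; the function name `rnn_forward`, the weight names (`Wxh`, `Whh`, `Why`) and the toy sizes are assumptions chosen for the example.

```python
import numpy as np

def rnn_forward(inputs, h0, Wxh, Whh, Why, bh, by):
    """Run a vanilla RNN over a list of one-hot input vectors."""
    h = h0
    hidden_states, outputs = [], []
    for x in inputs:  # one loop iteration per time step
        # The same Wxh, Whh and bh are reused at every time step.
        h = np.tanh(Wxh @ x + Whh @ h + bh)
        # Unnormalised scores over the vocabulary for the next character.
        y = Why @ h + by
        hidden_states.append(h)
        outputs.append(y)
    return hidden_states, outputs

# Toy sizes, assumed for illustration only.
vocab_size, hidden_size = 5, 8
rng = np.random.default_rng(0)
Wxh = rng.normal(0.0, 0.01, (hidden_size, vocab_size))
Whh = rng.normal(0.0, 0.01, (hidden_size, hidden_size))
Why = rng.normal(0.0, 0.01, (vocab_size, hidden_size))
bh = np.zeros(hidden_size)
by = np.zeros(vocab_size)

# A toy sequence of three character indices, one-hot encoded.
indices = [0, 3, 1]
inputs = [np.eye(vocab_size)[i] for i in indices]
hidden_states, outputs = rnn_forward(
    inputs, np.zeros(hidden_size), Wxh, Whh, Why, bh, by
)
```

Each hidden state depends on the current character and on the previous hidden state, which is how earlier characters influence later predictions.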

Image Source: The Unreasonable Effectiveness of Recurrent Neural Networks

In his gist, Andrej Karpathy uses NumPy to implement the char-RNN. I wanted to implement it in TensorFlow, both for faster training on a GPU and because TensorFlow has a neat way of organising code: you define the model up front and then use a session to feed data into it. I was also tired of seeing the same dataset, 'the mini Shakespeare', used everywhere, so a constant theme of this blog is that I use examples that differ from the usual ones, so that people can generalise the model for their own purposes rather than having to piece it together from a variety of resources. In addition, most of my models are trained in Jupyter notebooks, so readers can run them cell by cell and understand how the algorithm really works. I trained on three different datasets: Rick and Morty subtitles (cuz why not :P), the generic mini Shakespeare and Donald Trump tweets (cuz his tweets are interesting).
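To train on a text file of your own, the text first has to be turned into integer sequences. The following is a minimal sketch of that step under my own assumptions; the helper names `build_vocab` and `make_pairs` and the sequence length are hypothetical and not taken from the project code.

```python
def build_vocab(text):
    """Map every distinct character to an integer index and back."""
    chars = sorted(set(text))
    char_to_ix = {ch: i for i, ch in enumerate(chars)}
    ix_to_char = {i: ch for ch, i in char_to_ix.items()}
    return char_to_ix, ix_to_char

def make_pairs(text, char_to_ix, seq_length):
    """Split text into (input, target) index sequences.

    Each target is its input shifted one character to the right,
    so the model learns to predict the next character.
    """
    encoded = [char_to_ix[ch] for ch in text]
    pairs = []
    for start in range(0, len(encoded) - seq_length, seq_length):
        x = encoded[start:start + seq_length]
        y = encoded[start + 1:start + seq_length + 1]
        pairs.append((x, y))
    return pairs

# A short line stands in for the contents of a text file.
text = "That's it, Morty!"
char_to_ix, ix_to_char = build_vocab(text)
pairs = make_pairs(text, char_to_ix, seq_length=4)

first_input = "".join(ix_to_char[i] for i in pairs[0][0])
first_target = "".join(ix_to_char[i] for i in pairs[0][1])
```

Swapping in another dataset then only means reading a different file into `text`; the vocabulary is rebuilt from whatever characters appear in it.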